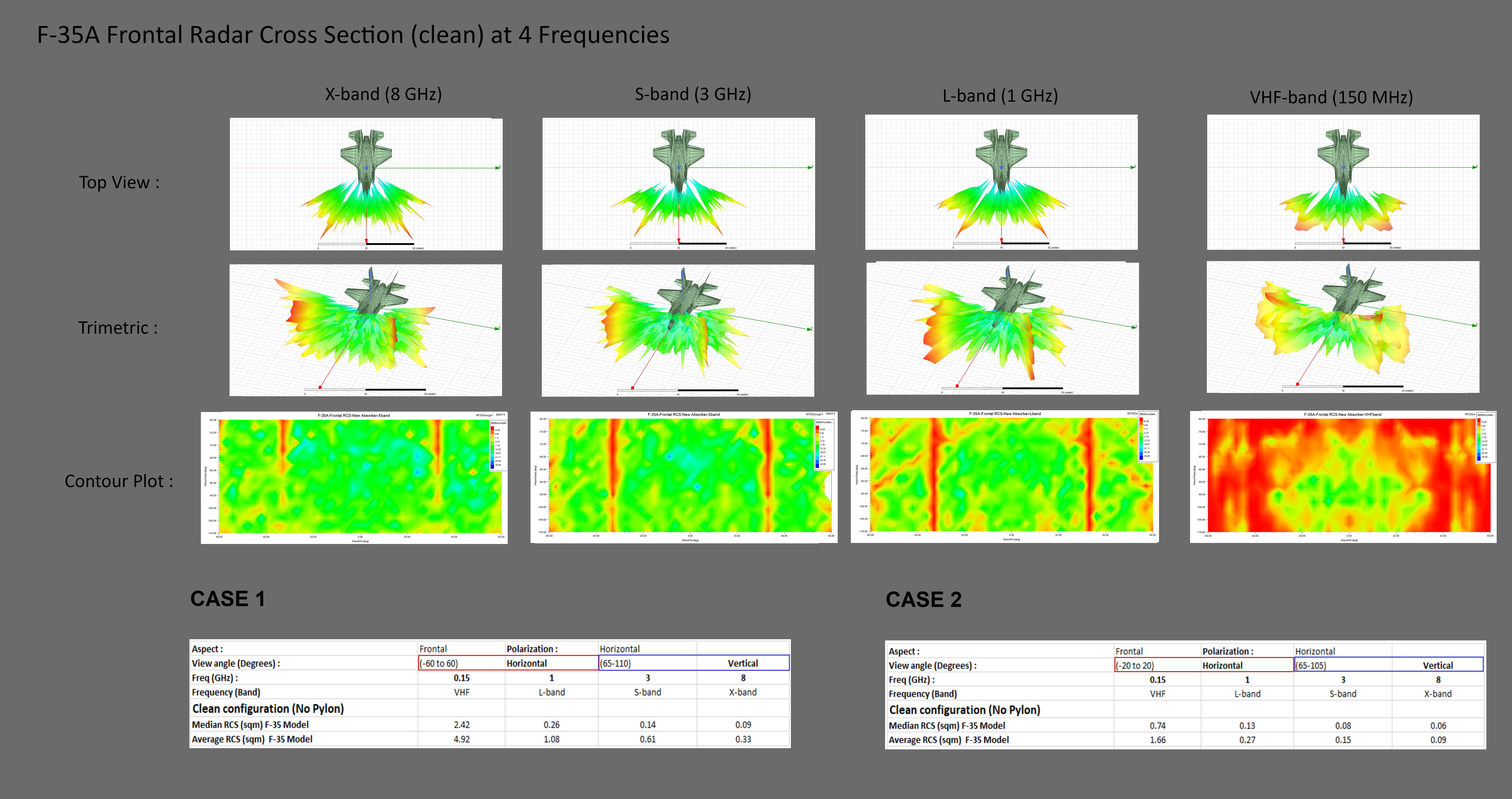

Well, the thing is that we will never know the true RCS. None of the US, China, or Russia will give out detailed RCS figures, only rough numbers, and compared with those numbers this work definitely represents more of a 'level of truth'. As with the very early AU work, details such as the reflection angles and the comparison between Case 1 and Case 2 mean more than a single RCS number. We might even conclude that the J-20 performs better at long range against radar in search mode, whereas the F-35 does better at short range against 'staring' mode.

I think in general there's some value in this kind of exercise, but where I hesitate to go as far as "represent some level of truth" is that 1) the accuracy of simulations is going to be pretty sensitive to model roughness, and 2) as stealth materials and modeling have gotten better, general shaping has become less deterministic in assessing RCS capability. It's still the principal factor, but it accounts for less than it used to.

Also, things like roughness or craftsmanship may not even be possible to model in reality because of their complexity, but that doesn't mean a model has no value or 'truth value'. In fact, building a model is common in almost every branch of science and engineering. There are numerous cases where important variables could not be modelled and were instead estimated from experience. That's why I said:

You can take it further: OK, the F-35 probably has the best craftsmanship, so its RCS would be closest to this number; the J-20 may be slightly worse, so give it, let's say, a 5% penalty; the Su-57 has a visible fan blade and tons of uneven screws, so let's penalize it even more. This would eventually become a judgement call, but it at least gives us a baseline for evaluating the case, and that is the value, or the truth, of this work.

The J-20 has roughly the same level of stealth as the F-35, and the Su-57, despite being the worst, is not as horrible as I thought.
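To make the penalty idea concrete, here is a rough sketch of how a simulated baseline could be adjusted by a judgement-based craftsmanship factor. Everything in it is an assumption for illustration: the function name `apply_penalties` is made up, the baseline values are placeholders in arbitrary units (not real or simulated RCS figures), and only the 5% J-20 penalty comes from the post; the Su-57 figure is an arbitrary stand-in for "penalize it even more".

```python
def apply_penalties(baseline_rcs, penalties):
    """Scale each baseline value by (1 + penalty); a missing penalty counts as 0."""
    return {
        name: rcs * (1.0 + penalties.get(name, 0.0))
        for name, rcs in baseline_rcs.items()
    }


# Placeholder baseline in arbitrary units. These are NOT real RCS numbers;
# in practice they would come from the shape-only simulation results.
baseline = {"F-35": 1.0, "J-20": 1.0, "Su-57": 1.0}

# Judgement calls: 5% for the J-20 is the figure from the post,
# the Su-57 value is a hypothetical larger penalty.
penalties = {"F-35": 0.0, "J-20": 0.05, "Su-57": 0.20}

adjusted = apply_penalties(baseline, penalties)

# Print from smallest to largest adjusted value.
for name, value in sorted(adjusted.items(), key=lambda kv: kv[1]):
    print(f"{name}: {value:.2f}")
```

The point is only that the simulation supplies the baseline and the penalties stay an explicit, arguable input, so anyone who disagrees with a penalty can change that one number and see how the ranking moves.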
